Benjamin Dookram

Sound Designer 🔊🎮 | Using sound to make games great!

Welcome!

You'll find my recent work below...

Pandora

Released Game

Published and released on Steam! A third-person hack-and-slash action-adventure game made in Unreal Engine 5. The player uses teleportation mechanics combined with combat to traverse the world and reach the final boss.

Ori and the Blind Forest

Title Screen Redesign

This is an audio redesign I did for the title screen of Moon Studios' Ori and the Blind Forest: a magical forest filled with the beautiful sounds of nature and whispers.

Horizon Zero Dawn

Title Screen Redesign

An audio redesign for the title screen of Guerrilla Games' Horizon Zero Dawn. Wind blows over a snowy canyon with flowing water nearby, while gunfire against monsters echoes in the distance.

Sound Design

Sound Re-designs

A redesign (in this context) means taking gameplay or scenes from an existing game, film or show, cutting ALL of the original audio and replacing it with a fresh, new listening experience. I love doing this because it's a great way to exercise my skills and creative thinking as I try to tell my own story through sound alone.

Projects

Sound design for video games has become my passion. Every day I'm learning more and gaining new experience. Below are projects that have given me the opportunity to work as a sound designer and technical sound designer. I've gained so much valuable knowledge through the development of each of them.

Horizon Zero Dawn (Redesign)

Guerrilla Games' audio team did a fantastic job with the sounds for their machines, weapons and tools, so taking this on meant I had to find a way to change or improve on something already so well done. This redesign was also more of a challenge because there were significantly more sounds to cover.

My goal was to create an audio experience that draws the listener's focus onto different actions than the original game would. That meant highlighting the sound of Aloy jumping, her clothes moving, her hook swinging and catching onto the Tallneck, the rope tensing under her weight and, of course, the Tallneck charging up an energy wave to scan the area. By far, the charge-up and release of that energy was the most complex part. Overall, I was able to be creative in how I went about it.

Without a strict structure, I made blending and processing my main focus. I wanted the audio to sound fairly natural while enhancing the impact of certain moments. I adjusted volume, EQ, pitch, reverb and panning so that everything merged together in a way that made sense to the ear, and I went back and forth adding and removing sounds until it felt right.

Inside (Redesign)

Playdead did a PHENOMENAL job designing the sounds for Inside. The challenging part of redesigning this scene was making it different from an original that was already so well done. I also wanted to try speeding up my sound design process, so instead of redesigning every element in the scene, I focused on the parts that stood out most in this gameplay.

I wanted the listener to be aware of the small objects in their environment, so I gave the metal plates and the electric lever their own sounds, panned to match their positions on screen. Then I created a distant explosion that releases a damaging shockwave, which quickly moves toward the character. Several different explosion sounds were used for the release, and reversed versions of them for the build-up.
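Panning a sound to follow an object's position on screen is often done with a constant-power pan law, which keeps perceived loudness steady as the sound moves between the left and right channels. The Python sketch below is a generic illustration of that idea, not a description of the exact panning used in this redesign; the function name and the 0-to-1 screen coordinate are assumptions.

```python
import math


def constant_power_pan(screen_x: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a sound at a normalized screen position.

    screen_x: 0.0 = left edge, 0.5 = centre, 1.0 = right edge (assumed convention).
    The gains satisfy left**2 + right**2 == 1, so total power stays constant.
    """
    x = min(max(screen_x, 0.0), 1.0)   # clamp to the visible screen
    angle = x * math.pi / 2            # map 0..1 onto 0..90 degrees
    return math.cos(angle), math.sin(angle)


# Example: a lever slightly to the right of centre
left_gain, right_gain = constant_power_pan(0.7)
```

At the centre both channels sit at about 0.707 (roughly -3 dB) rather than 0.5, which is what avoids the dip in loudness a simple linear crossfade would cause.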

The main focus for this was learning how to balance what the listener needed to hear with what made sense for them to imagine. The player doesn't know what caused the explosion; they can only imagine it. What they need to know is that the explosion, though far away, is so massive that it released a wave of force that can and will kill them. For their own safety, they're forced to be aware of something unknown.

Demon Slayer (Redesign)

Studio Ufotable's Demon Slayer anime is surely one of the best of all time. I wanted to stay true to the original designer's intentions with the main focus being on using supernatural sword techniques to kill demons. So I tried not to stray too far from the original while keeping it unique and aligned with my own tastes.

I absolutely love thunderstorms and lightning, so my goal was to make it sound like Zenitsu was summoning the primal power of the storm into his attack. I used gusty wind for the build-up and movement, explosive thunder for the release and rattling lightning for the damage. The audio is meant to represent the intensity of the tempest itself.

The design revolves around storytelling based on visuals and imagination. What is the viewer seeing on screen? What's the contextual narrative? What can I do to emphasize that narrative? The viewer sees a demon slayer using a lightning sword technique; the context is a character unleashing an ultimate power. I wanted to use sound to tell the viewer just how powerful this character is.

Ori and the Blind Forest (Redesign)

The original title screen has beautiful music scored over the whole thing. My goal was to use sound to create an ambience that breathes life into the world within the game.

Although the original is extremely well done, I wanted to try replacing it with audio that expresses more of the world of Ori. That's where the sounds of nature (birds, wind, trees and water) and the magical sounds of wisps and voices come in. They're carefully balanced and panned to immerse the listener in the magic of the blind forest itself.

The UI sounds for clicking and hovering, as well as the fade-in and fade-out effects, are there to complement the magic-infused ambience while maintaining the feel of interaction.

Horizon Zero Dawn (Redesign)

Originally, the title screen was scored with a unique theme for the main character, Aloy. My goal was to move away from that: instead of telling the story of Aloy, I wanted to tell the story of the world she lives in, a world full of natural beauty and chaos.

Aloy is a strong and capable huntress whose desire to seek out the truth drives her into many dangerous situations. Throughout the game, she is thrust into fights against many different enemies, human and machine alike. This is reflected in the sounds of explosions and monsters in the distance. Close up, however, it's peaceful: water flows on the right side and snow falls while a gentle wind blows atop the cliff. Birds sing, blissfully unaware of the distant dangers. The idea is that even though there is the chaos of war in the distance, the quiet and serene presence of nature is always there.

To increase immersion, I wanted this to feel like a POV of someone standing atop a cliff looking down into the world of Horizon Zero Dawn. The flowing water is panned to the right side. The sounds of war are far away and reverberate off the surfaces of the surrounding mountains.

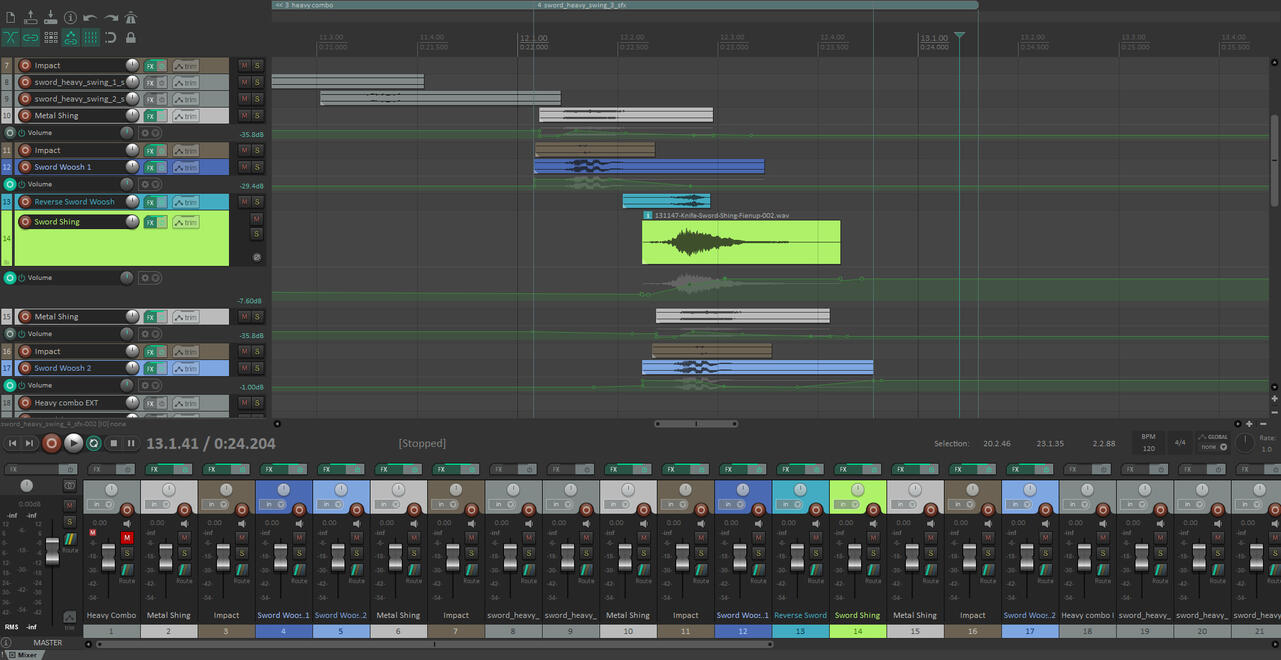

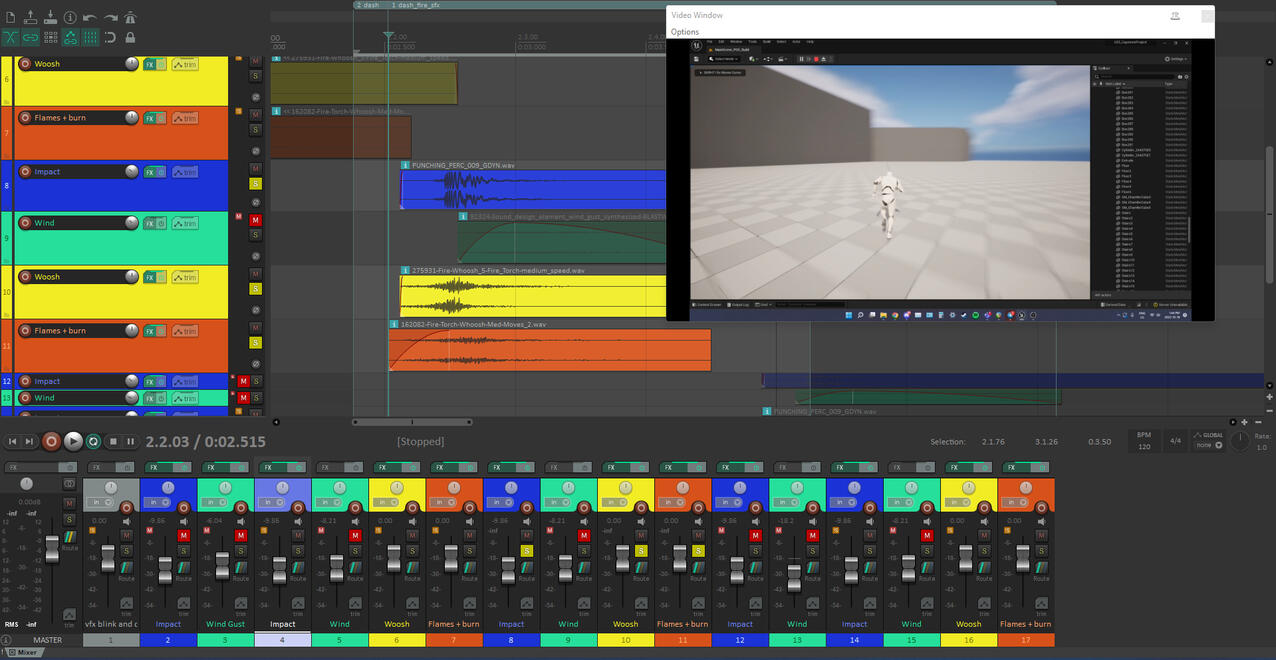

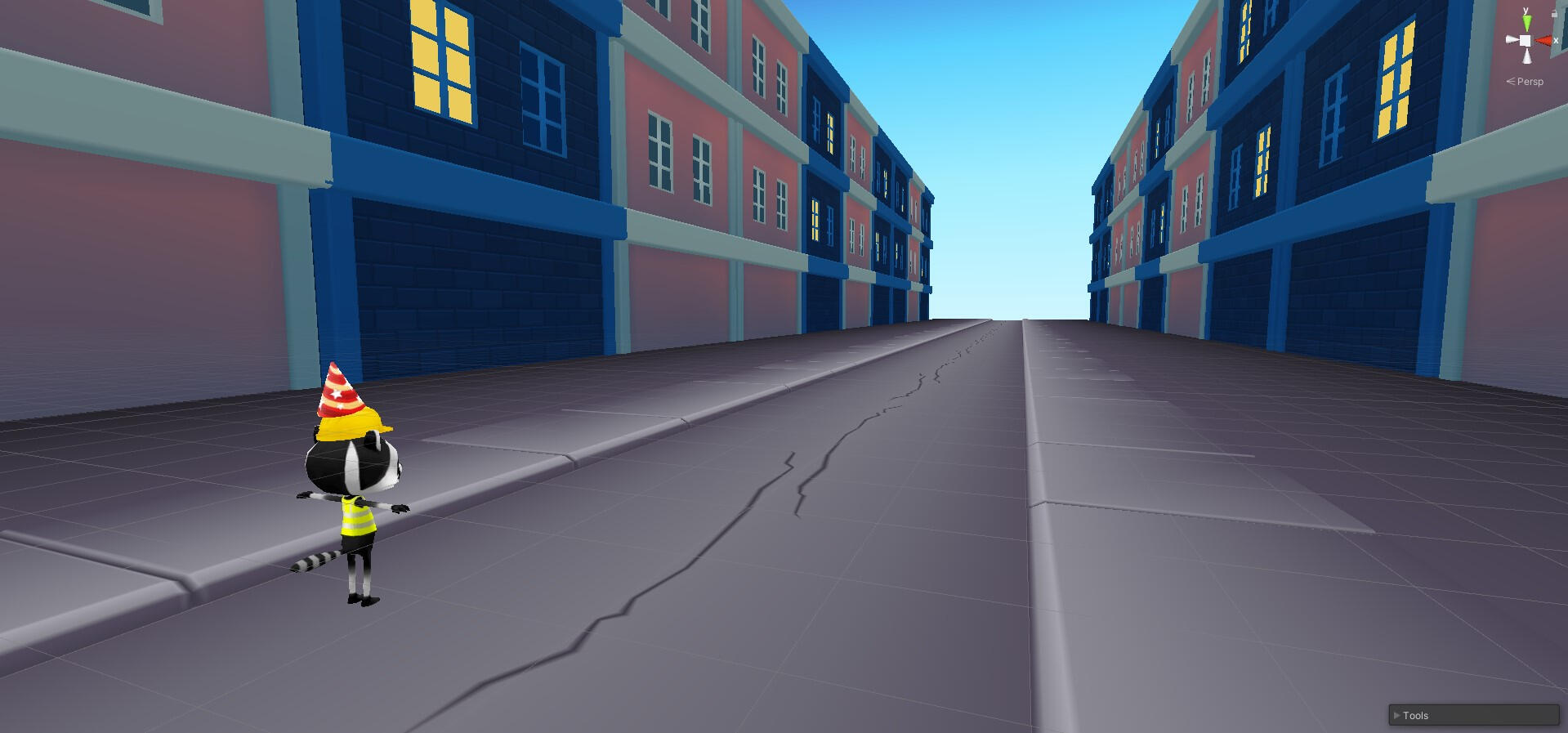

Pandora

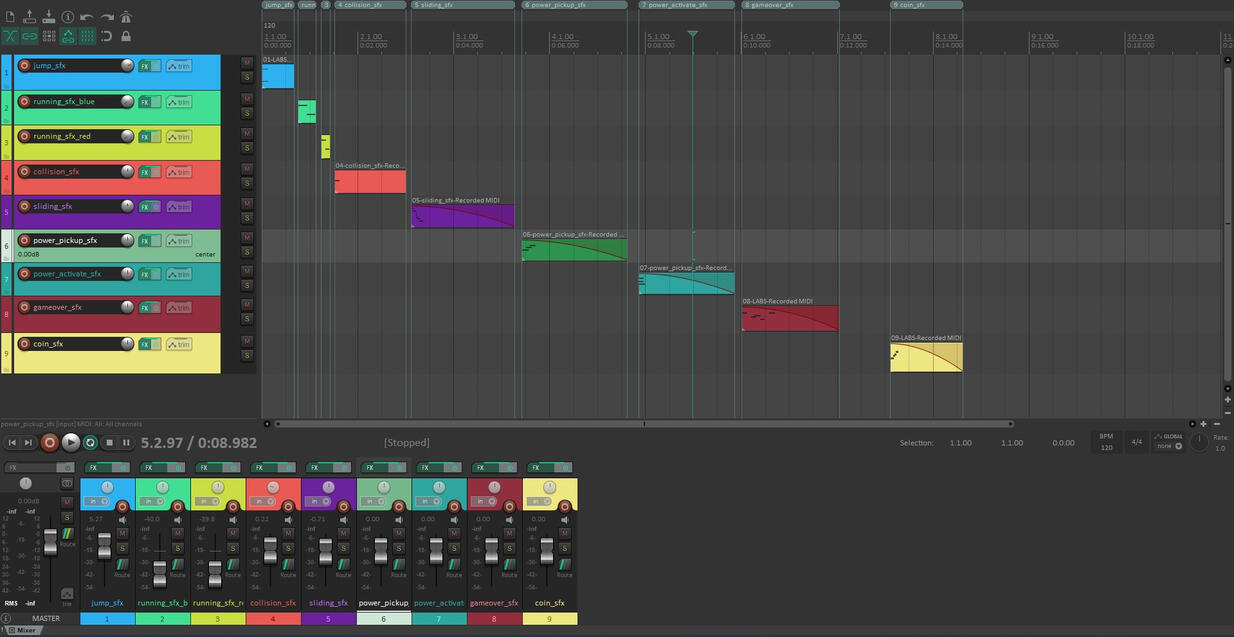

Tools: Unreal Engine 5, REAPER, FMOD

Good news! This game is now published and fully released on Steam for free! Feel free to check it out here: Steam Page or click the image below.

Pandora was my team's capstone project for our final year at Sheridan College. It was developed using Unreal Engine 5.0.3, with audio implemented through FMOD middleware.

As the dedicated sound designer, I made almost every sound in the game. Later, when I took on the role of the sole technical sound designer, I implemented every audio asset into the game myself. Below is a short video that showcases my work on the game audio.

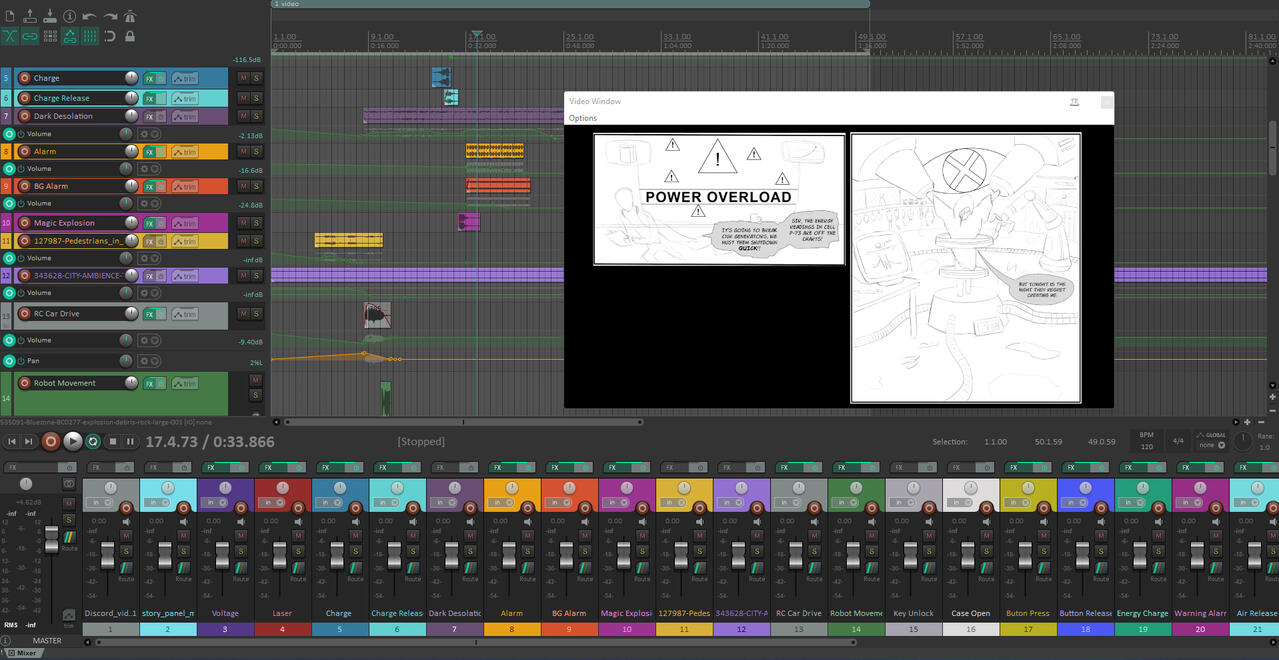

In addition to the game audio, I also worked on the audio for the in-game story panel cinematic that introduces the story to the player. I made and implemented all of its sound effects. The video below showcases the first version of that cinematic.

At the beginning, my main focus was making high-quality, unique sounds; during that phase I made all the sound effects for the main character, the minion enemies and the story panel video. As development progressed, my focus shifted toward the technical side. I worked on implementing all audio assets in the game and ensuring they were fully functional, using Unreal 5's built-in Blueprints system and animation studio.

Below is a video of my team's MVP presentation. This was about halfway through our one-year development process. At this point, I had many of the sounds in, as well as the story panel video. The idea was to show off a product that aligned with our initial game concept.

REAPER was my best friend throughout the development of Pandora. Every piece of audio I worked on was edited and rendered in REAPER. Once most of the audio assets were created, I spent more time in Unreal and FMOD, where I programmed full functionality for all sounds.

The sound sources were pulled from a paid royalty-free library. Using layering techniques, I built all the sound effects and ensured proper timing against in-game footage. I worked closely with my teammates, so I got used to explaining my design process to the entire team. I took in feedback from them, as well as from playtests, to finalize the sound effects. Once the sounds were completed, I rendered them and brought them into FMOD.

Once in FMOD, I would organize them into the proper banks, attach the necessary filters and parameters and finally build them into the project where I could access them via Unreal Blueprints. In doing this, I created many sound systems, including interactive music for the final boss.

This project proved to be an amazing opportunity for me to collaborate with a variety of developers and testers to build up my skills as a sound designer.

Interactive Music in Unreal 5

The following is a devlog I wrote about implementing interactive music in Unreal Engine 5. I faced many audio challenges throughout development, but this was the first one, and I spent days troubleshooting to get it working properly. When it was finally done, I was very proud of my work. So why not show it off a bit?

At the time of writing this devlog, I consider myself a noob. An audio noob. To be more specific, I am an audio noob striving to learn how sound works in video games. This devlog is about my experience working on my capstone game, Pandora, in Unreal 5. As the dedicated sound designer on my team, I worked with all the sound effects, voices and music. To clarify, I didn’t make the music. Instead, I was lucky enough to collaborate with students from Sheridan’s MSSS program who specialize in composing high-quality music. We had several meetings, and my job was simply to implement the music into the game. “Simple” was the plan. The idea was that they would make tracks and send them to me, and I would implement them into the game in Unreal 5 using FMOD middleware. In truth, that’s all it was until I was introduced to a term called “Interactive Music”.

According to one of the MSSS students who introduced me to this concept, interactive music is music that changes in response to what happens in the game. Let me ask you this: have you ever played a game with one or more boss fights? In many games, boss fights have intense, action-packed battle music. At a certain point in the fight, the action will pause and the boss will become stronger. When that happens, the music becomes even more intense. Maybe it changes key, or it becomes faster or louder, or something else signals that things just got REAL. As a result, the player becomes more immersed in the battle. The challenge increases, so the player feels the need to rise and meet it.

In Pandora, we have one big boss fight. It’s been called the “Shining Moment” of our game. We made it clear to the MSSS students that we needed the music to be thematic and epic. Combining this with the interactive music concept, we decided to split our boss music into layers that add on to each other based on the boss’ health. When the boss drops to certain health levels, another layer of music is added to increase the intensity of the fight.

I, being the audio noob I am (and knowing nothing about music or implementing music in Unreal 5) challenged myself to figure out how it would work. I already had knowledge and experience working with sounds in FMOD so I was confident that if I learned how to make interactive music work in FMOD, I could easily apply it to our game. I did some research along with another team member to figure out whether or not this was doable with FMOD. My team member sent me this video:

Shoutout to Andrew Hind Music on YouTube. Here is the link to their channel.

Here, I was introduced to “parameters” in FMOD. Parameters are like variables that can change the properties of an FMOD event while it is playing. When I call on an FMOD event to be played in the actual game build, I can also set that parameter to change how the event is played. In the video, he has music that is split into 4 layers and a parameter called “Intensity”. As Intensity rises, the volume of each layer is raised. In a build, something happening in the game would increase the value of Intensity. As it increases, more layers are heard and the player can listen to an ensemble of music tracks while experiencing the game.
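To make the parameter idea concrete, here is a small Python sketch of how an Intensity value could map to per-layer volumes. The curve is an assumption (each layer fades in linearly over one unit of Intensity); in FMOD Studio this kind of mapping is usually drawn as volume automation on each track rather than written as code, and `layer_gains` is a hypothetical name.

```python
def layer_gains(intensity: float, num_layers: int = 4) -> list[float]:
    """Return a linear gain (0.0 to 1.0) for each music layer at a given Intensity.

    Assumed curve: layer n (counting from 1) fades in linearly as Intensity
    goes from n - 1 to n, then stays at full volume.
    """
    gains = []
    for n in range(1, num_layers + 1):
        raw = float(intensity) - (n - 1)
        gains.append(min(max(raw, 0.0), 1.0))  # clamp to 0..1
    return gains


print(layer_gains(2.5))  # [1.0, 1.0, 0.5, 0.0]
```

At an Intensity of 2.5, the first two layers play at full volume, the third is halfway in and the fourth is still silent, which matches the "more layers are heard as Intensity rises" behaviour described above.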

I had to apply this to my own situation. Our boss music was months away from being composed, so I had to use filler music stems to test out parameters. One of the MSSS students sent me the tracks for a song from Disney’s Pirates of the Caribbean. I followed along with the video, and this was the result:

So now I have interactive music in FMOD and it works! I have layers of music and the volume of each layer is being controlled by the parameter “Intensity”. By the way, I did in fact copy the name of the parameter in the video but it can technically be called anything. I chose to call it Intensity because just like the video, I want the parameter to control how intense the music is. The idea is that as the Intensity parameter goes up, the feeling of actual intensity increases in the boss fight. Now that it’s working in FMOD, I need to decide how it’s going to work in our Unreal 5 build.

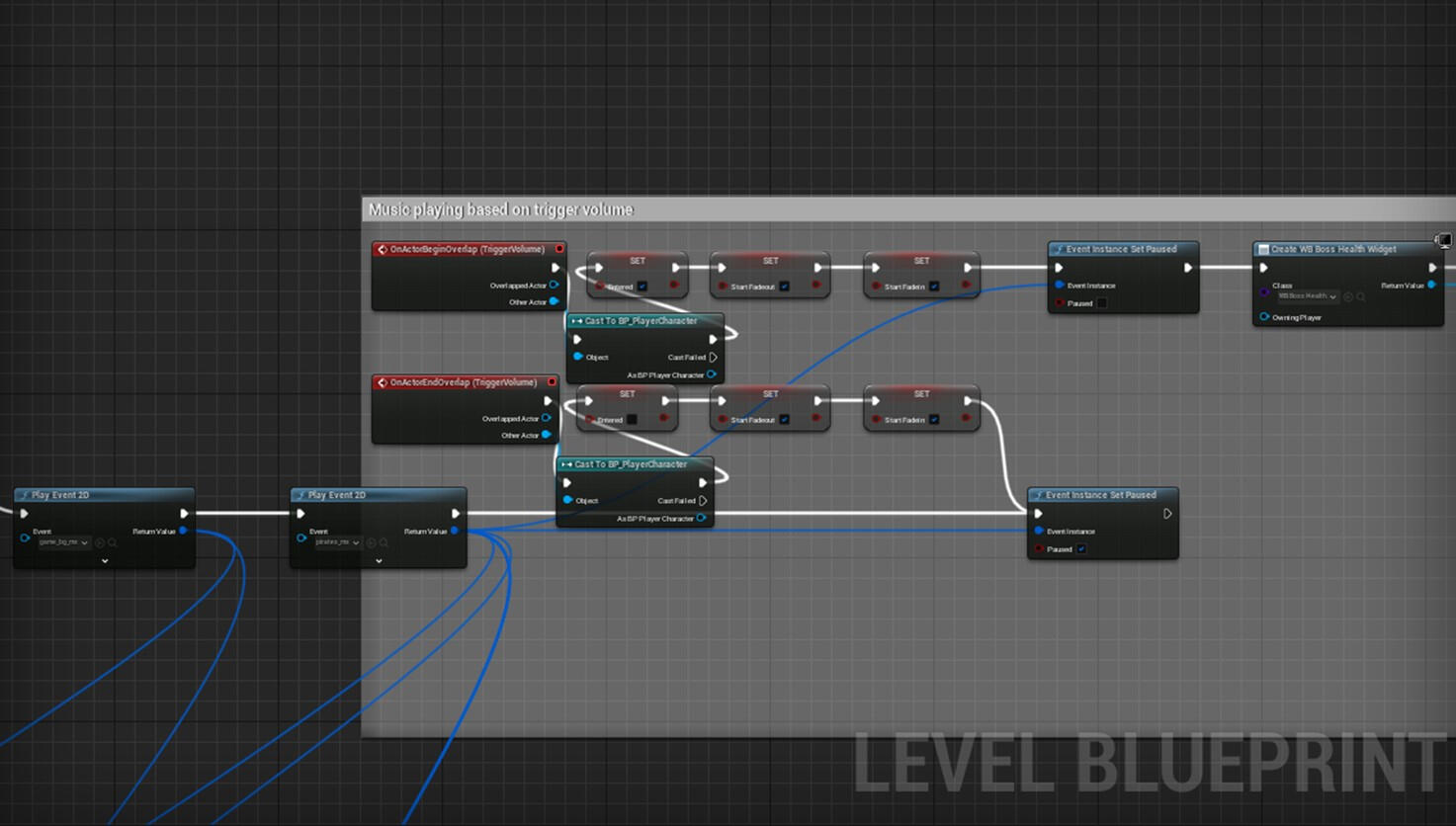

The FMOD file is connected to the build and the boss music event is accessible in Unreal 5. The next step was figuring out exactly how the music would be set up in the build so the game itself could affect the Intensity parameter. The first challenge was controlling when the music plays. I set up a trigger box around the boss area so that when the player enters the box, the background music stops and the boss music starts, and vice versa when they leave. I also worked with our programmer to set up variables that let the music fade in and out instead of the tracks cutting each other off.

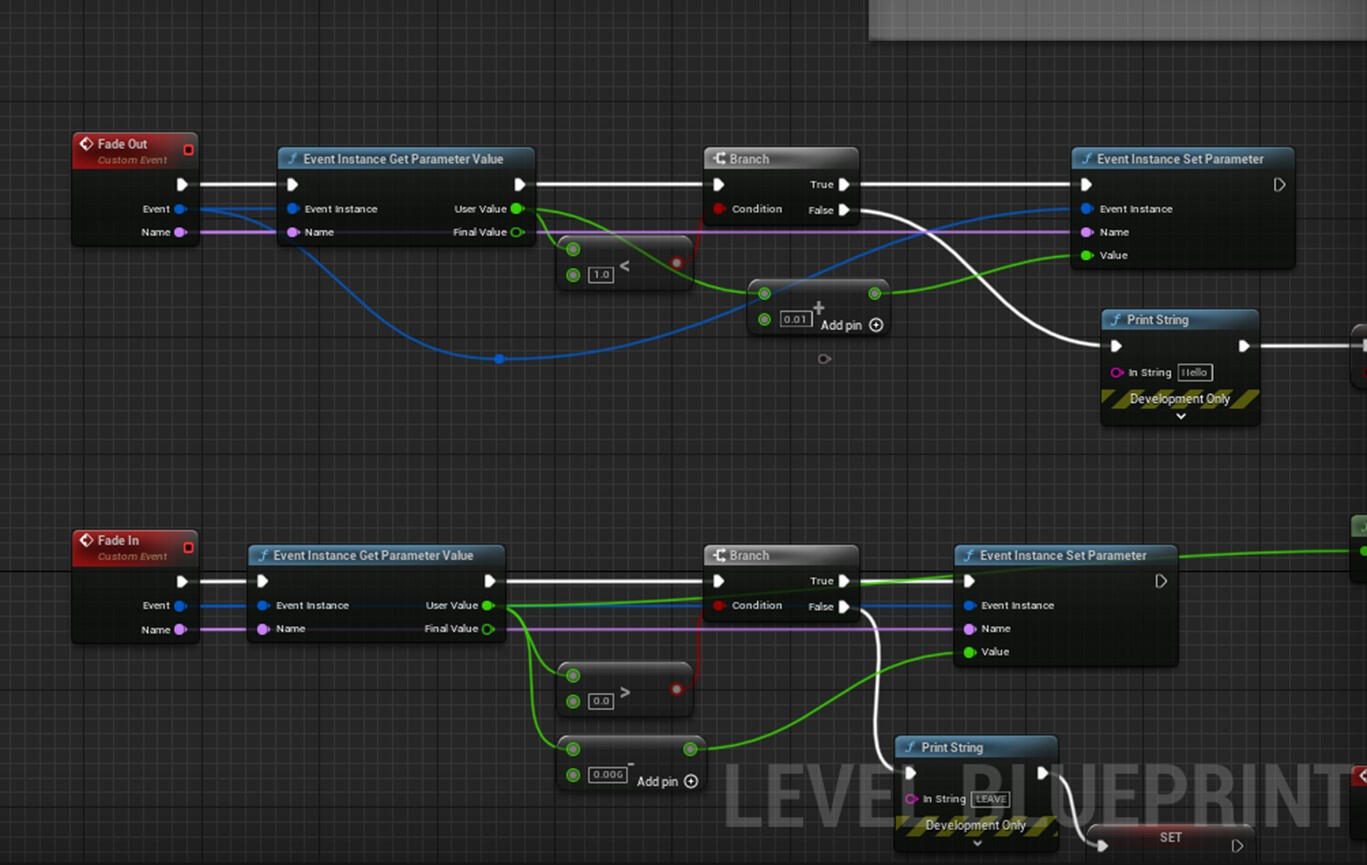

The image above shows the setup for the trigger box controlling the music. The image below shows the fade-out and fade-in variables being set up so the music tracks fade gently in and out. This was crucial to set up first so I didn’t run into playback issues later.
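The trigger-box behaviour described above can be sketched as a simple crossfade. This is a hypothetical Python model, not the actual Blueprint or FMOD setup used in Pandora: the class and method names, the linear fade and the two-second default are all assumptions.

```python
def move_toward(current: float, target: float, max_step: float) -> float:
    """Step current toward target without overshooting."""
    if current < target:
        return min(current + max_step, target)
    return max(current - max_step, target)


class MusicCrossfader:
    """Hypothetical model of the boss-area music switch.

    Entering the trigger area fades boss music in and background music out;
    leaving does the reverse. Volumes are linear gains from 0.0 to 1.0.
    """

    def __init__(self, fade_seconds: float = 2.0) -> None:
        self.fade_seconds = fade_seconds
        self.background = 1.0   # background music starts audible
        self.boss = 0.0         # boss music starts silent
        self.in_boss_area = False

    def on_trigger_enter(self) -> None:
        self.in_boss_area = True

    def on_trigger_exit(self) -> None:
        self.in_boss_area = False

    def update(self, dt: float) -> None:
        """Advance both fades by dt seconds; call once per frame."""
        step = dt / self.fade_seconds
        boss_target = 1.0 if self.in_boss_area else 0.0
        self.boss = move_toward(self.boss, boss_target, step)
        self.background = move_toward(self.background, 1.0 - boss_target, step)
```

Calling `on_trigger_enter()` and then `update(dt)` every frame moves the boss music toward full volume and the background music toward silence over `fade_seconds`; leaving the area reverses it. A linear fade keeps the sketch simple; an equal-power curve is a common alternative when both tracks overlap.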

Now that playback was controlled, I needed to actually implement the boss music and make it work with the parameter. I’ll spare you the details of how much troubleshooting I had to do, but I worked on this for a few days before I got it. I kept trying lots of different things. I got help from one of the music students my team collaborated with. I even tried using AI to help. Eventually, I managed to find the right nodes and get things running.

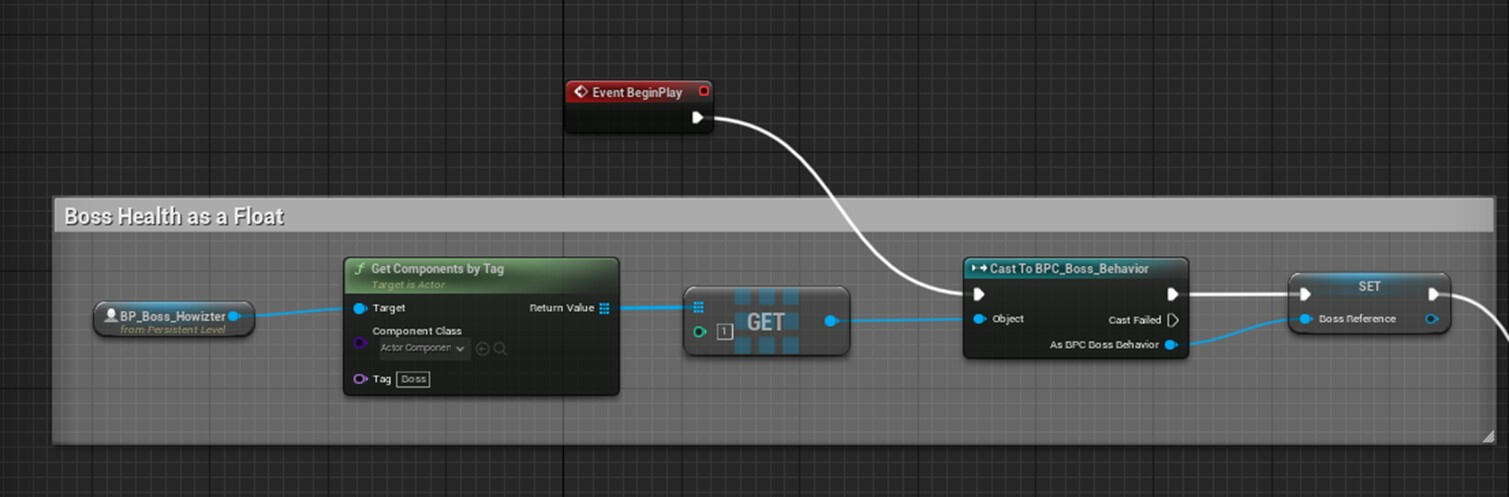

Here is a GIF that shows the Blueprint setup:

So here’s what I had to do. The first step was getting access to the boss’ health, which is split into two values: the boss’ current health and the boss’ max health. When the current health drops to a certain percentage of the max health, the Intensity parameter increases so the next layer of music is added. It works like this:

While the current health is above 70% of the max health, Intensity is set to 1. This plays the first layer.

When the current health drops to 70% of the max health or below, Intensity increases to 2. This adds the second layer, so the player hears layers 1 and 2.

When the current health drops to 30% of the max health or below, Intensity increases to 3. This adds the third and final layer, so the player hears layers 1, 2 and 3.
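The same threshold logic, written as a Python function rather than Blueprint nodes, might look like the sketch below. The function name is hypothetical; the 70% and 30% thresholds come from the description above. Comparing against a health ratio means the thresholds still hold if the boss’ max health is rebalanced later.

```python
def boss_music_intensity(current_health: float, max_health: float) -> int:
    """Map the boss' health to the Intensity parameter (1, 2 or 3).

    Thresholds from the devlog: 70% and 30% of max health.
    """
    if max_health <= 0:
        raise ValueError("max_health must be positive")
    ratio = current_health / max_health
    if ratio <= 0.30:
        return 3  # layers 1, 2 and 3
    if ratio <= 0.70:
        return 2  # layers 1 and 2
    return 1      # layer 1 only


for hp in (1000, 701, 700, 301, 300, 0):
    print(hp, boss_music_intensity(hp, 1000))
```

Checking the lowest threshold first keeps each branch simple: once a case returns, the later comparisons never need an upper bound.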

Here is a video of the blueprint when everything was done and working correctly.

Here is the in-game result:

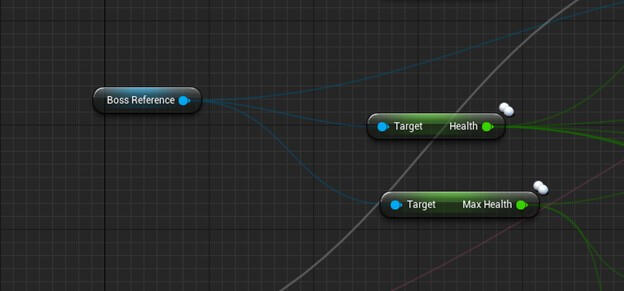

The biggest challenge with this was getting access to the boss’ health. Thankfully, our programmer was more than willing to help me accomplish this. As it turns out, you have to make a reference to the boss object in the scene and then get the actor component. Then you can cast to the boss behavior to access the health.

Once that was done, I was able to get and use the boss’ health as a current health value and a max health value.

As I write this devlog, our game is much further along, so some of these details have changed. We now have the actual boss music instead of the filler Pirates music (as nice as it sounds), and the layers fade in instead of just jumping on top of each other. I certainly learned a lot working on this, and it was definitely a challenge for me. However, without this setup, I would not have been able to implement the music in our current build. I’m extremely thankful to have done this beforehand instead of leaving it to the last minute. Now, Pandora’s boss battle has the epic music it deserves.
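One way to make layers fade in rather than jump is to let the Intensity value glide toward its new target at a limited rate instead of snapping to it. The devlog doesn't say exactly how Pandora's fades were built (FMOD Studio parameters also offer a seek speed setting that produces a similar glide), so treat this Python sketch as an assumption; `ramp_intensity` and its arguments are hypothetical.

```python
def ramp_intensity(current: float, target: float,
                   units_per_second: float, dt: float) -> float:
    """Move Intensity toward target by at most units_per_second * dt."""
    max_step = units_per_second * dt
    delta = target - current
    if abs(delta) <= max_step:
        return target  # close enough: land exactly on the target
    return current + max_step if delta > 0 else current - max_step


# Simulate the boss dropping to 70% health: the Intensity target goes from 1 to 2.
value = 1.0
for frame in range(4):
    value = ramp_intensity(value, 2.0, units_per_second=0.5, dt=0.5)
    print(f"t={0.5 * (frame + 1):.1f}s  Intensity={value:.2f}")
```

With a rate of 0.5 units per second, moving from Intensity 1 to 2 takes two seconds, so the new layer's volume rises gradually instead of cutting in.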

Thank you to those who took the time to read this. If you’re looking to do something similar, I hope this helps.

Audio in Hellblade: Senua's Sacrifice

This is a deep-dive analysis I completed on the use of audio in Hellblade: Senua's Sacrifice. I had never played the game before, but I learned about its complex audio and decided to experience it for myself. I was shocked. I learned so much about manipulating audio to enhance the player's experience. Below is the video presentation (to be reworked in the near future) with a link to the script. Below that is the written analysis.

Introduction

Audio is undoubtedly one of the most crucial aspects of the experience players have in Hellblade: Senua’s Sacrifice. It’s used in many ways to make the player feel immersed and to convey certain meanings and themes. Audio isn’t just there to keep the player entertained or satisfied. It works to affect the player’s mental state while playing and directly connects the player to the main character, Senua. Much like Senua, the player perceives the world around them through the sounds they hear. The goal of this review is to use research to figure out how this is accomplished: to analyze how the developers use audio in this game and how effective their sound design decisions are.

Thesis

The developers of Hellblade use complex sound design to portray Senua in a way that evokes emotional and mental states. Although they are separate, Senua and the player are meant to share a sense of oneness that makes the player experience the world of Hellblade just as Senua would. Obviously, not in a physical sense, as the player doesn’t get hurt (hopefully) the way Senua does. However, in an emotional and mental sense, the player becomes so immersed in the world through Senua’s perspective that they are forced to deal with stress, anxiety, helplessness, anger, fear and many other emotions. This is not done through sound alone, but without sound, this experience could not be achieved to the extent it currently is.

Use of Binaural Audio

Firstly, let’s talk about the most important technique they used when implementing audio. Binaural audio refers to reproducing the real-life experience of listening to sounds. It’s all about placing audio in a 3D space so it sounds like it’s coming from different locations around the listener. In Hellblade, it’s used to enhance overall immersion. Thunder booming high in the sky amid the whooshing wind is one example of how sound immerses the player in the environment. The sound isn’t just present; it surrounds the player and comes from above, just as thunder in real life isn’t just loud but high in the sky, above everything. A more vivid example is when Senua has to run through a burning environment. Without spoiling too much, the player hears a variety of sounds that draw out their emotions: crackling wood, rising flames, collapsing structures, agonizing screams and much more. All the sounds combine to enhance the environment, but each sound has its own location in 3D space.
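One of the cues binaural audio reproduces is the interaural time difference (ITD): a sound off to one side reaches the nearer ear slightly before the farther one. The Python sketch below uses Woodworth's classic spherical-head approximation. It illustrates the principle only and says nothing about Hellblade's actual audio pipeline; the head radius is an assumed average value.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s in air at about 20 °C
HEAD_RADIUS = 0.0875     # m, an assumed average head radius


def interaural_time_difference(azimuth_rad: float) -> float:
    """Woodworth spherical-head estimate of ITD in seconds.

    azimuth_rad: 0 = straight ahead, pi/2 = directly to one side.
    Intended for -pi/2 <= azimuth_rad <= pi/2; negative values mean the left side.
    """
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (azimuth_rad + math.sin(azimuth_rad))


print(f"{interaural_time_difference(math.pi / 2) * 1000:.2f} ms")
```

Directly to one side this gives roughly 0.66 ms, and straight ahead it is zero. Full binaural rendering also relies on level and spectral differences shaped by the head and ears, which this sketch leaves out.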

The idea is that binaural audio can imitate a real-life experience while merging it with the fictional world of the game. Using binaural audio to create spatial sound is a big part of why Hellblade players had such strong reactions when playing the game. They would hear voices speaking from different directions, causing their emotions to rise or fall. This also worked to close the gap between the player and Senua. Whenever Senua faced dangerous, life-threatening situations, the player could feel her desperation to live. Whenever the environment began to turn darker and the world around Senua seemed to be corrupted, the player could feel her anxiety. This could also be why some players felt as though they were experiencing mental illness themselves when playing Hellblade.

Mental Illness

Senua is a character who has to deal with a severe case of psychosis. Psychosis is a mental disorder that changes the way a person perceives the world around them, causing them to disconnect from reality. Hellblade is based on 8th-century Nordic and Celtic culture, so Senua’s psychosis is seen as a curse rather than a treatable mental illness. Instead of treating it, Senua accepts it as part of her life. She hears and sees things that aren’t actually there throughout her entire journey. This shapes how the player perceives both the world and Senua’s mental state.

The developers consulted professionals and patients to ensure that psychosis was effectively communicated through the gameplay. According to some of the patients, they could hear voices coming from different locations in their environment. This is where binaural audio comes in. The player hears many different voices throughout the game, and the most important and vivid ones sound extremely close, as if someone is speaking or whispering directly into the player’s ear. In some situations, these voices are helpful, guiding the player toward the right decision. However, they can also be doubtful and negative, laughing at Senua or talking about her incompetence. They can also strike fear and cause the player to panic. The overall effect leaves the player feeling mentally unstable. It shows how effectively the developers simulated the effects of psychosis in the game and passed those effects on to the player.

Connecting Audio and Story

Despite the game’s many dark themes of immense evil, it opens in a calm and gentle way. It begins with a narrator speaking to the player in first person. The voice comforts the player right before it leads into the introduction of Senua. While doing this, the voice moves from one side of the headset to the other. Immediately, the player becomes aware of the audio’s 3D nature, and the foundation of their connection to the game is established through an emotional reaction to that audio. The rest of the game builds on this foundation, using audio to reinforce the narrative. As the player learns more, they begin to piece together the story of Hellblade and how

The truth is that the story and its themes could not have been communicated without the audio. The writer, Tameem, admitted that he couldn’t have written the story if he had just sat down and tried to write it. The story is told through events, and the player perceives these events based on what they see. However, the story contains aspects that could not be shown but were still necessary for the player to fully understand Senua’s world. This is where the audio shines. It’s the audio that communicates Senua’s mental illness and her twisted perception of reality.

A good example is how the player discovers who Senua is when she experiences flashbacks through hallucinations. These moments give the player information about Senua’s past relationships, experiences and they work to define who she is in the player’s eyes. Perhaps they can relate to her in some ways, or they could criticize her past decisions. They could even grow to admire her for what she’s trying to accomplish, despite what she’s been through. Whatever it may be, these moments help the player to empathize with Senua. As a result, the voices speaking to and about her hold so much more weight. They don’t just affect the gameplay, they affect how the player feels about Senua and her current situation. Once the player understands her, the experience of her psychosis and the voices she’s trapped with become more real.

Conclusion

Hellblade: Senua’s Sacrifice uses audio in a truly unique way. The developers didn’t just use sound to make the game entertaining; they used it to create a surreal experience. That’s why it has such an impact on its players. The game tells the story of a warrior who embarks on a dangerous journey. The audio turns that journey into an experience that reaches out to the player and takes hold of them on a mental and emotional level. Through binaural audio, players are forced to step out of their own world and into the twisted world of Senua as she fights against her own version of hell. In truth, there’s so much more to unravel about the audio of Hellblade. But these were some key components that helped me better understand how audio can create a strong atmosphere in a game, and I hope this has taught you something about the power of audio too.

Sources

Primary

Hellblade: Senua’s Sacrifice

Secondary

HELLBLADE: SENUA’S SACRIFICE – SETTING THE GOLD STANDARD FOR AUDIO

Hellblade is an audio nightmare

The Sound Design of ‘Hellblade’ Makes you Feel Mentally Ill

The Incredibly Sound Storytelling of Hellblade: Senua’s Sacrifice

Tertiary

Image 1

Figure 2

Figure 3

Figure 4

Reality Switch

Unity

Over the summer of my third year at Sheridan, my team and I were accepted into Sheridan's EDGE program for our internship. We built a game development business venture that strived to make meaningful games under the name "Enygma Games". This was our first (and only) project.

As part of the Enygma Games team, I helped to shape the concept of a game that strives to spread awareness about the environment. Players progress through levels by switching between a healthy, thriving world and a ruined world that resulted from ignoring the deterioration of the environment.

The sounds I created did NOT make it into the final build due to a change in the prioritization of our venture. However, the sounds I did make and the use of FMOD still helped me to grow as a sound designer.

Using REAPER, I tried to make sounds that established the theme of the game. Simple and immersive enough to make the player believe in the world, as well as the consequences within that world. After that, the sound files were put into FMOD, polished and then implemented into the build through the FMOD plugin for Unity.


Although this game was incomplete, I was able to use REAPER to create technology-based sounds that suggest a futuristic setting. In addition, this was my first project using FMOD, so I was able to get a grasp on how middleware works.

We Come in Peace

Unity

Design Challenge Week was an event exclusively for Game Design students at Sheridan College. The challenge has Game Design students from years 1 to 3 team up, get to know each other and build a game from scratch in a week. This was my second time taking part, and the theme was casual arcade style. At the time, I just wanted to take on work that no one else had covered, so I was tasked with creating cool sounds for our game. This was my first time working on audio in a game. I didn't realize it then, but this was the beginning of my experience with sound design.

I was part of a small team of fellow Game Design students. We had a programmer, two artists, a UI designer and myself. My job was to create sounds for an 8-bit style game in which the player takes on the role of an astronaut who needs to escape a hostile alien planet. I made the background music and the combat SFX.

I used an 8-bit sound effect maker called SFXR and the Scratch 8-bit music maker to create simple, but effective classic arcade game sounds. It certainly wasn't as complex as my more recent work but it was a fantastic first time experience that I will never forget.

Level Switch

Unity

Over the course of our third year, we were assigned the task of making a game that relates to a modern social issue. I was the primary game designer and sound designer for this project.

This game started out with research into how COVID affected the gender wage gap in the workforce. After brainstorming, I came up with the idea to create a game that communicates suffering versus privilege through two different states of difficulty.

As you can see, we had to use filler assets but that didn't stop us from building our vision. Using REAPER with the Spitfire VST plugin, I created instrument-based sound effects to set an almost lo-fi style tone throughout the experience.

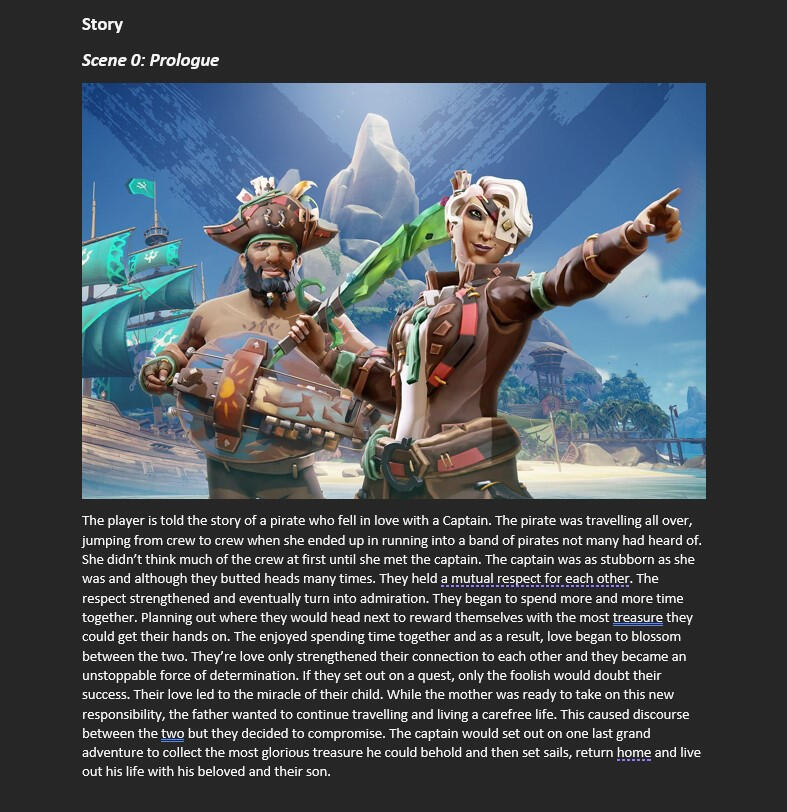

Tale of the Lost

Unity

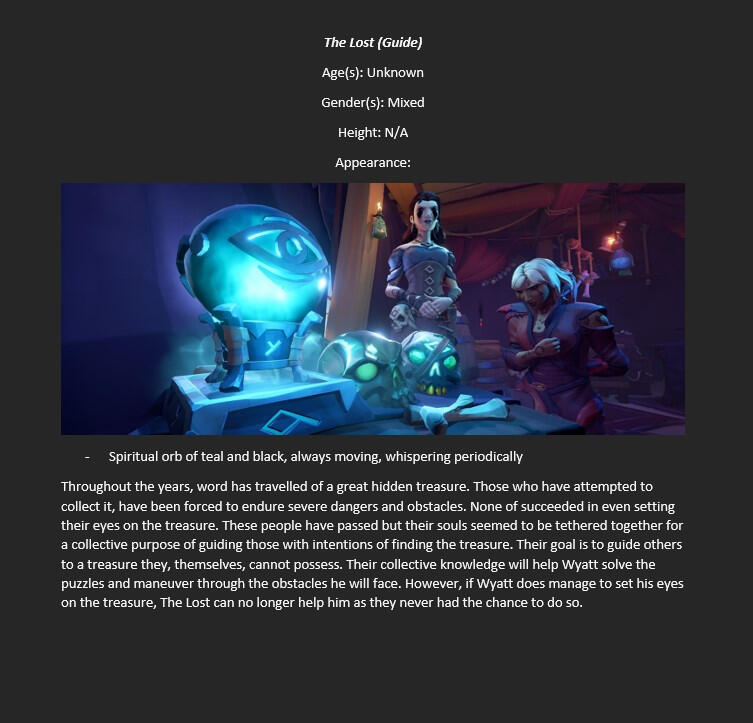

This is the result of a narrative game concept that I worked on with another designer. We took inspiration from the game Sea of Thieves.

The game is designed to tell the sad and mysterious story of a pirate as he discovers his past. Along the way, the player meets different characters/ entities that are used to teach the player how to interact with the virtual world around them. This eventually leads to an intriguing plot twist that concludes the story, while starting another.

My teammate and I created a narrative concept ready for a team of developers to pick up and use. The result was a narrative bible and a beatmap that outlines the game's progression.

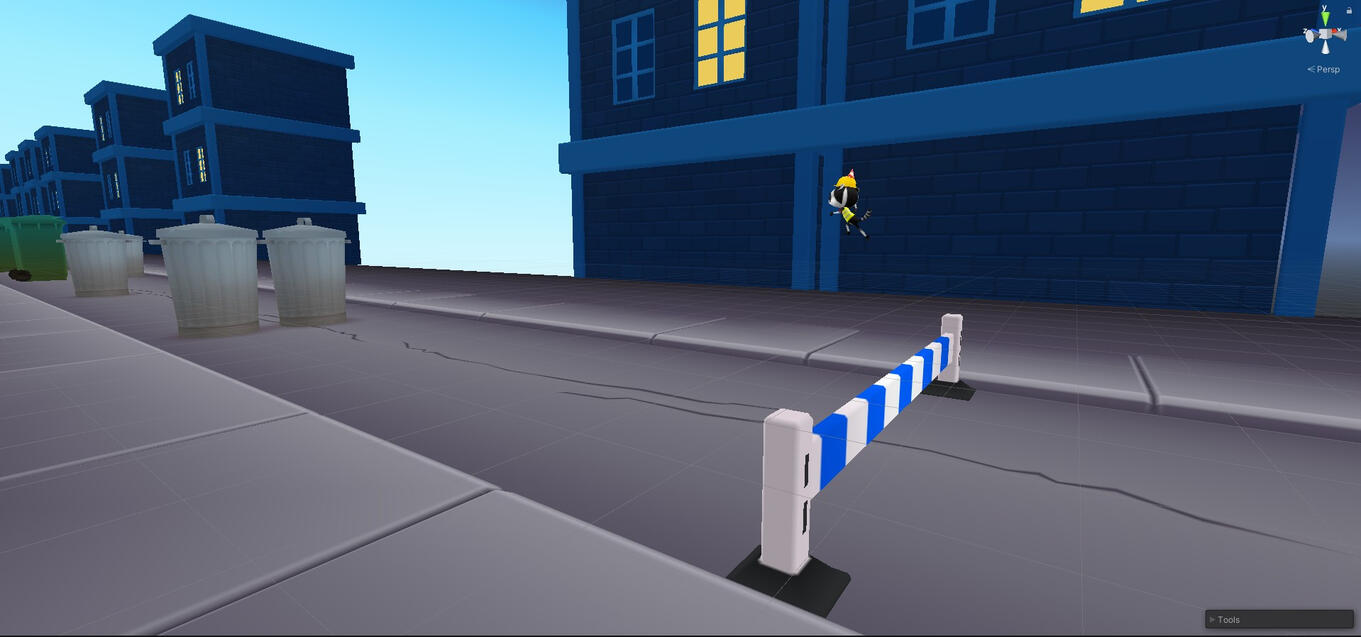

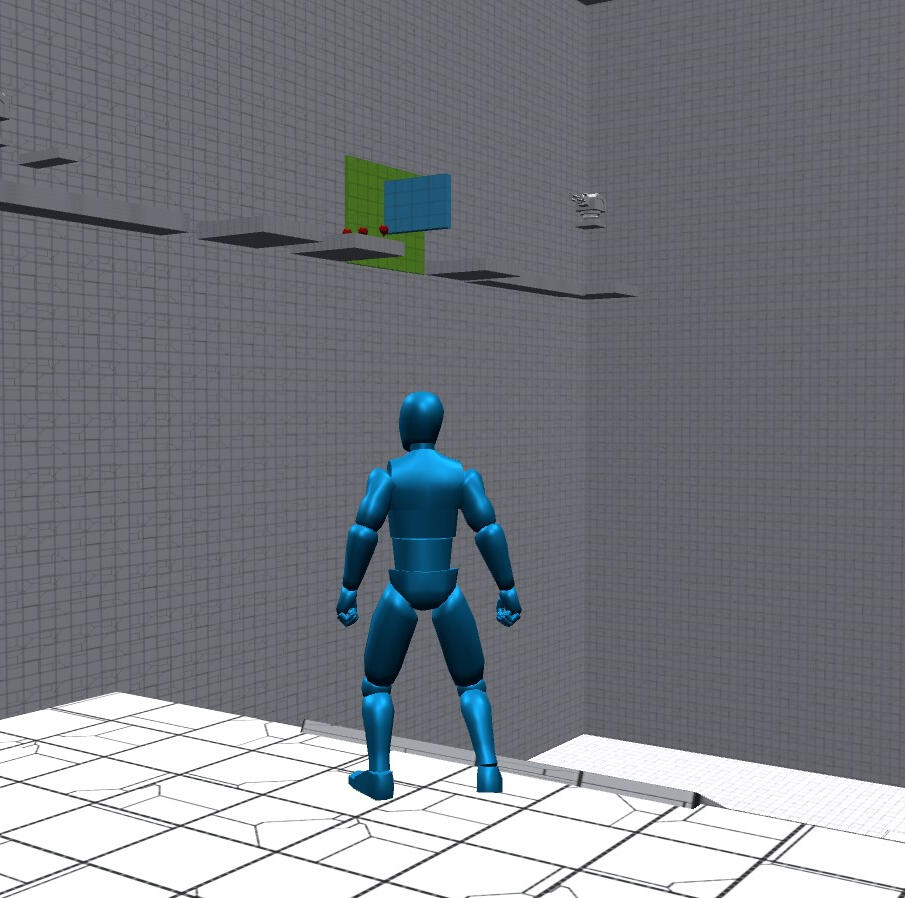

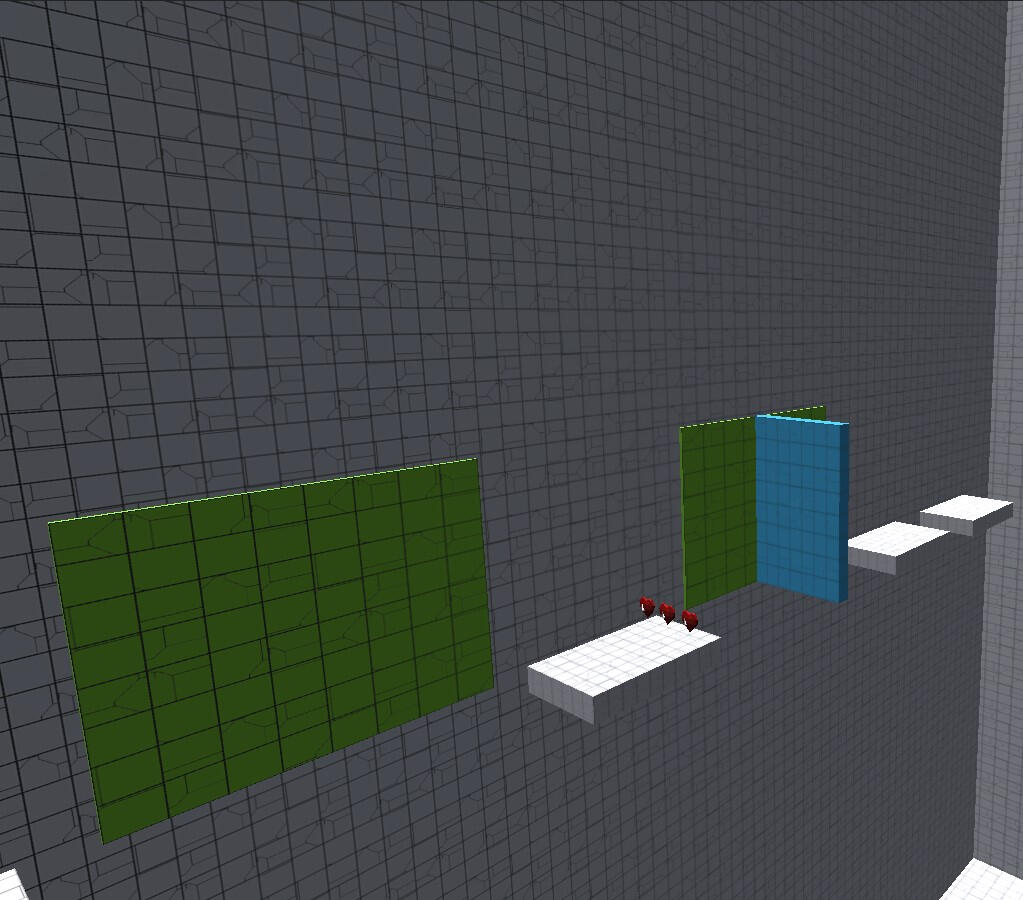

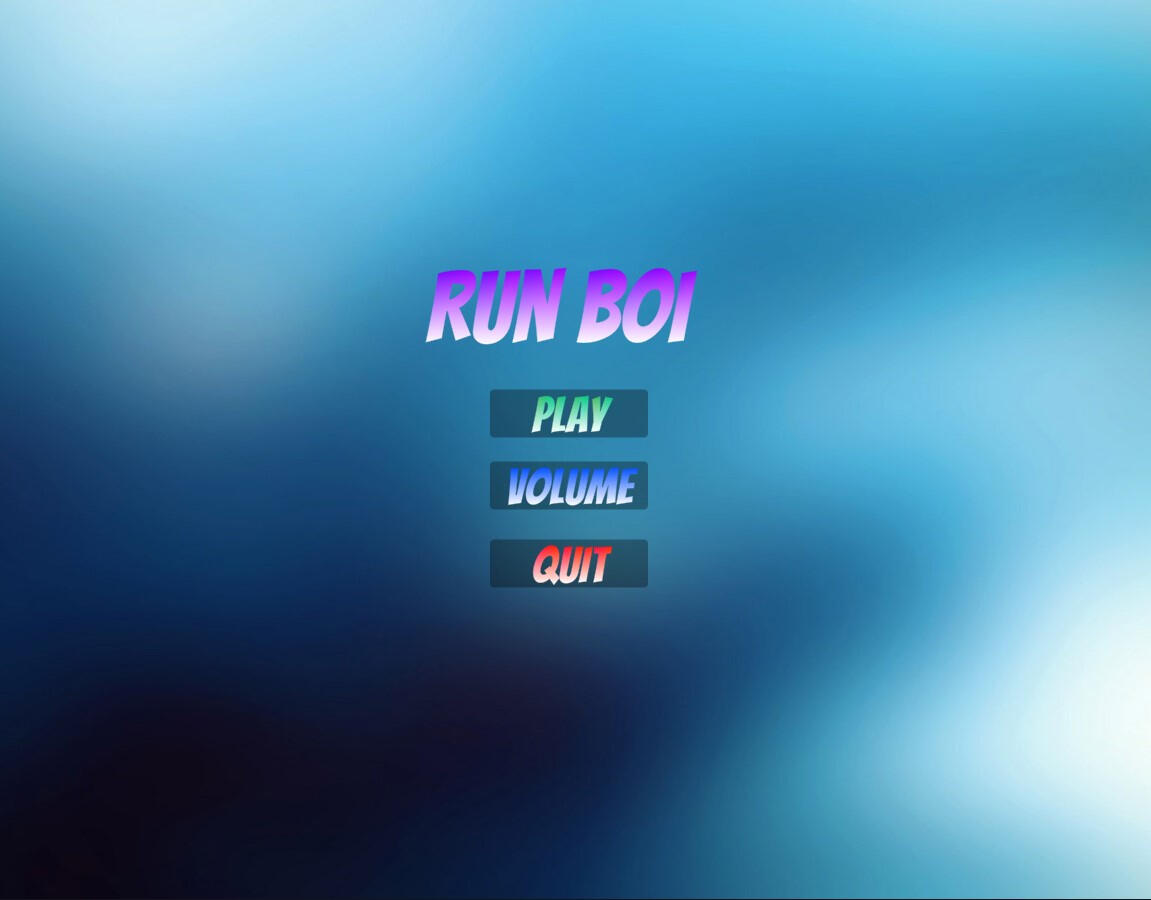

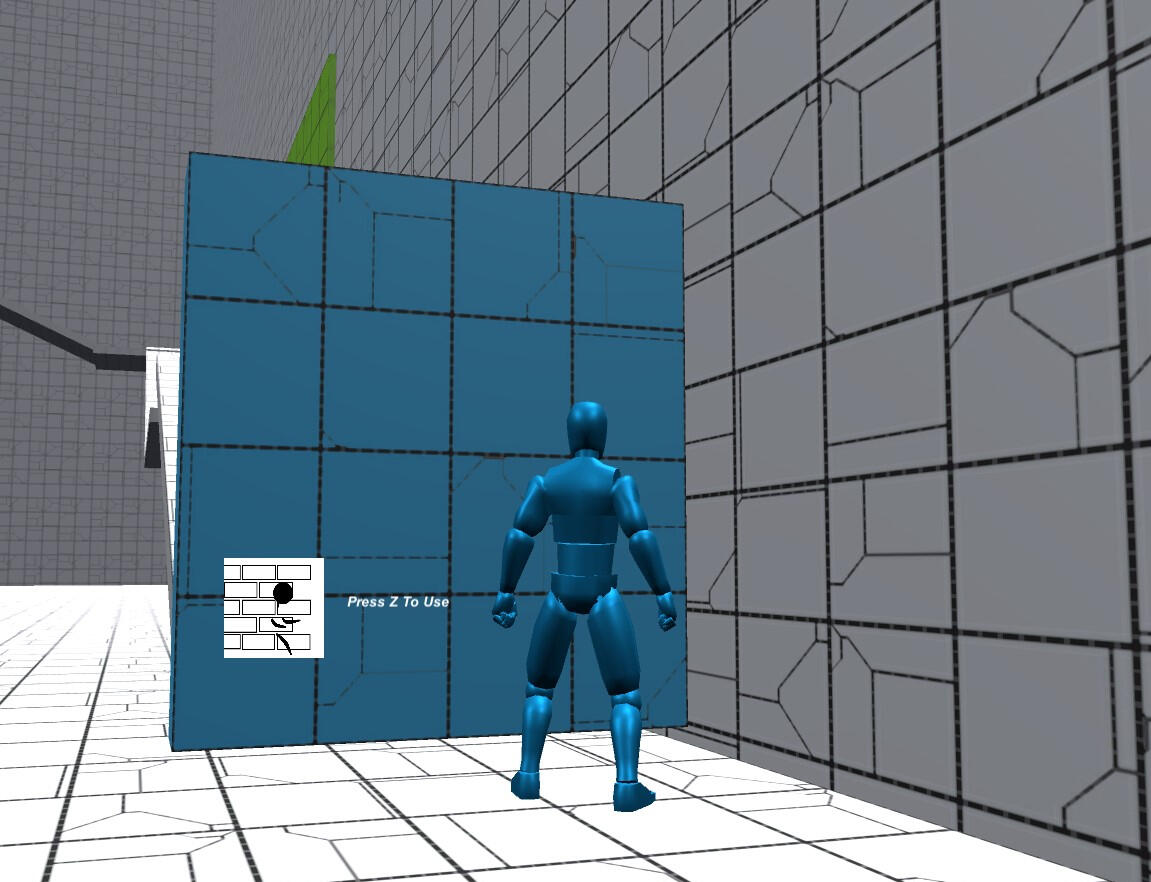

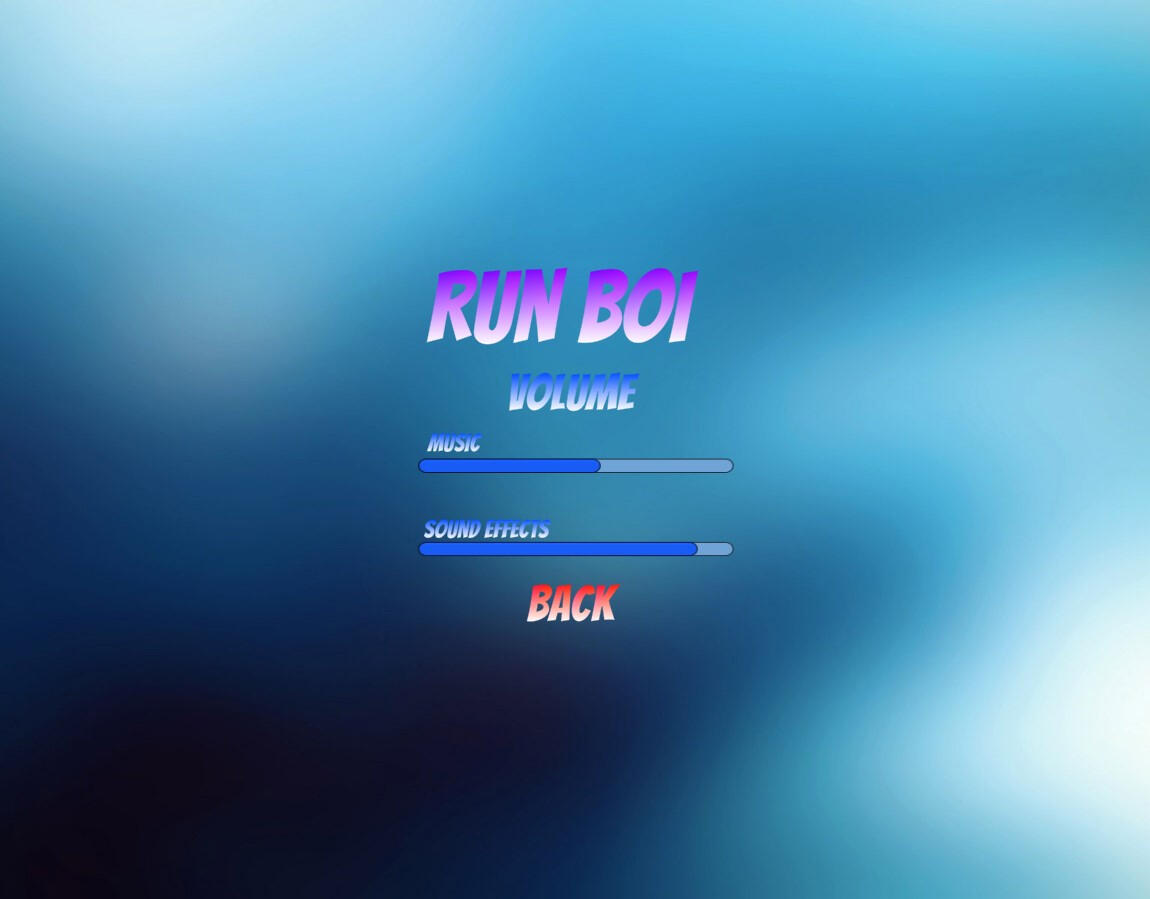

Run Boi

Unity

This was a second-year project that two other students and I worked on. I was a game designer who also worked on the UI and overall play experience.

At the time, Fall Guys was trending, so we wanted to make a similar game, but with a twist. The idea was to make a unique 3D platformer with a variety of obstacles that challenged the player.

During the development process, I worked closely with my teammates to ensure the experience was well defined and aligned with our core pillars. I designed and implemented a basic user interface and menu system. My goal was to make it simple in nature and effective in communication.

About Me

Education

Honours Bachelor of Game Design

Sound Design

Game Programming

Game Design

Narrative Design

Skills

Proficiency with Digital Audio Workstation: REAPER on Windows

Experienced with audio implementation via middleware (FMOD & Wwise)

Utilizing VSTs and Plugins for audio mixing/ processing

Using sound effect libraries

Working with source control (Perforce)

Familiar with audio programming (Blueprints & C#)

Effective audio work in Unity and Unreal game engines

Strong self-management and communication

Hello! I'm Ben and I am EXTREMELY passionate about sound design in games and other media. Every project creates an experience that acts as a gateway to another world. As designers, we get to define that world and enhance it as we see fit. When it comes to sound design, I combine theory with application to ensure a fulfilling and meaningful experience for myself, my team and of course, the user.

For games specifically, my goal is to effectively merge my audio skills with my game design knowledge to create truly immersive atmospheres. By working with sound effects, voices, music and ambience, I strive to create atmospheres that align with the game's core pillars. I want players to connect to the world within the game so they can enjoy the experience of escapism to the fullest.

Software

REAPER DAW

FMOD Middleware

Wwise Middleware

Unreal Engine 5

Unity Engine

Perforce

Google Workspace

Microsoft Office

Adobe Cloud